Research Paper Review - Scaling Memcached at Meta

This post is about my understanding on “Scaling Memcached at Facebook” by Meta. The paper discusses the challenges faced by Facebook in scaling Memcached, an open-source, high-performance, distributed memory object caching system. Reading a bunch of these papers was a part of my coursework, and I thought it would be a good idea to share my understanding.

Introduction to Memcached

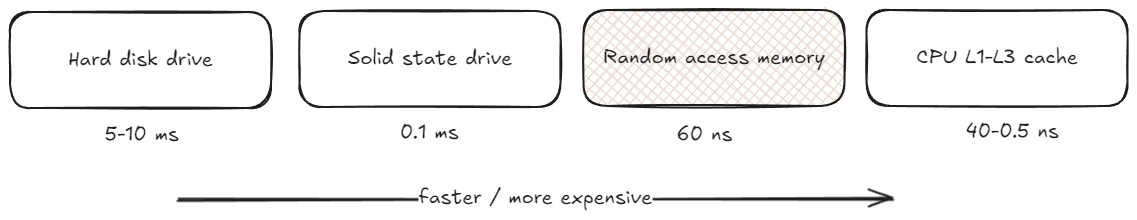

Memcached is an in-memory database. Which means, it operates and stores information in the system memory rather than on the disk. This by definition improves latency and throughput.

Comparison of Storage and Memory Access Speeds

Comparison of Storage and Memory Access Speeds

What does a single cluster look like?

There are 3 main components in a single cluster.

- Region

- Primary Regions: Responsible for handling majority of the traffic.

- Secondary Regions: These are the replicas of the primary regions.

- Storage cluster

- Database: This is where the data is stored. It is the source of truth.

High-level architecture of a single cluster

High-level architecture of a single cluster

Challenges faced by Facebook

Adding or removing memcached servers

As I previously mentioned, you can have hundreds of memcached servers for one single webserver. And the keys are distributed among them. So how do you efficiently add or remove a memcached server?

They use a technique called consistent hashing. This is a technique that maps keys to servers in such a way that when a server is added or removed, only a small fraction of keys are remapped.

Batching requests

When a webserver receives a request, it sends a request to the memcached server. This is a network call. And network calls are expensive. So, how do you reduce the number of network calls? You group them and send them in batches. This is called batching requests.

UDP for GET requests

TCP calls are expensive. They involve a handshake and a teardown. So, for GET requests, they swapped out TCP with UDP. UDP is connectionless and lightweight. The tradeoff is that it is unreliable. But in that case, it is simply marked as a cache miss. And this is a tradeoff they were willing to make.

Stale sets and thundering herds

In a highly concurrent and large scale distributed system such, there is a good chance that data becomes stale. And when it does, you need to invalidate it. But how do you invalidate it without causing a thundering herd?

They use a technique called leasing mechanism. According to this mechanism, any new cache update is only accepted if the lease token is verified. The assignment of these lease tokens can done with a delay of 5 seconds to avoid thundering herds.

Optimizations to the Memcached binary

Automatic hash table resizing

When you talk about hash-tables all of them have something called as a load factor. When the load factor exceeds a certain threshold, the hash table is doubled in size.

Multi-threaded architecture

Instead of allowing Memcached to operate on a single core, they made it multi-threaded. This way, they could handle concurrent requests.

Adaptive slab allocator

A system’s available memory can be divided into fixed size chunks called slabs. These slabs are further split into partitions of unit size. This allocator is capable of dynamically adjusting the slab size based on the requests that are being received, minimizing memory wastage.

Conclusion

I tried to cover the main points from the paper without going too much into detail. The paper is a good starting point if you are interested in learning more about high level system design and its practical implications.